Acquire - Turning Legacy Data into Assets

To start a data science project, first identify and assess the available sources of data. We define this as the Acquire stage of the Data Science Lifecycle. Once you have identified the data sources, you can use data science tools to efficiently extract, transform, and load (ETL) historical data into a format that can be used in new ways.

Here’s an example of how it can work. You start the project by selecting a use case (a scenario addressing a real business challenge). Suppose your scenario involves a research program with 10+ years of historical data stored in multiple formats and locations (e.g., disks, emails, photos, shared network drives, thumb drives). These materials were generated to address a specific question or research purpose, and had limited value once that question was resolved. However, your use case shows that you can gain incremental value from these legacy materials by using new tools to transform and prepare the data for analysis.

Quick Tips

- Lead a proof of principle project to understand a body of historical data that could have long-term value to your company.

- Start by documenting metadata and creating a place to store the data, so that datasets can be searched and accessed for reuse. (Metadata captures information about datasets, such as the source, owner name, timeframe in which the data was collected, and how the data can be used.)

- Leverage technology to make it easier and more systematic to transform historical data.
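As a minimal sketch of the metadata tip above, a catalog entry can be modeled as a small record that is easy to store and search. The class name, field names, and example values below are illustrative assumptions, not a prescribed schema; adapt them to your own inventory.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DatasetMetadata:
    """One catalog entry. Field names are hypothetical and mirror the
    metadata described above: source, owner, timeframe, permitted use."""
    name: str
    source: str
    owner: str
    collected_from: str  # ISO date, e.g. "2012-01-01"
    collected_to: str
    permitted_use: str


def to_catalog_json(records):
    """Serialize catalog entries to a JSON string that can be stored or shared."""
    return json.dumps([asdict(r) for r in records], indent=2)


def search(records, keyword):
    """Return the entries in which any field contains the keyword (case-insensitive)."""
    kw = keyword.lower()
    return [
        r for r in records
        if any(kw in str(value).lower() for value in asdict(r).values())
    ]


# Made-up example entry for illustration only.
example = DatasetMetadata(
    name="field_trials_2012",
    source="shared network drive",
    owner="Research team",
    collected_from="2012-01-01",
    collected_to="2012-12-31",
    permitted_use="internal analysis only",
)
```

Calling `search([example], "network")` returns the example entry, which is the kind of lookup that makes a dataset discoverable for reuse.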

Getting Started

Discover

- Begin with a discovery process to understand what exists, and create an inventory of potential datasets. Note that some data may be stored on external drives or in remote locations.

- Create an inventory template to help guide the teams in cataloging data more uniformly.

- Select a use case to test and validate technical solutions for integrating the data and using it.
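One way to bootstrap the inventory is to scan a drive or folder and record basic facts about each file. The sketch below uses only the Python standard library; the column names in `INVENTORY_FIELDS` are an assumed template, and a real inventory would likely add fields that need human input, such as owner and purpose.

```python
import csv
from datetime import datetime, timezone
from pathlib import Path

# Assumed inventory template; extend it with the columns your teams need.
INVENTORY_FIELDS = ["path", "file_type", "size_bytes", "last_modified"]


def build_inventory(root):
    """Walk `root` recursively and return one inventory row per file."""
    rows = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file():
            continue
        stat = path.stat()
        modified = datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc)
        rows.append({
            "path": str(path),
            # Files without an extension are flagged for manual review.
            "file_type": path.suffix.lower() or "(none)",
            "size_bytes": stat.st_size,
            "last_modified": modified.date().isoformat(),
        })
    return rows


def write_inventory(rows, out_path):
    """Write the inventory rows to a CSV file that teams can review and extend."""
    with open(out_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=INVENTORY_FIELDS)
        writer.writeheader()
        writer.writerows(rows)
```

Running `write_inventory(build_inventory(some_folder), "inventory.csv")` on each storage location gives every team the same starting template, where `some_folder` stands for whichever drive or directory you are cataloging.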

Design

- Develop business and technical requirements.

- Design the process to extract, transform, and load legacy data.

- Research and identify tools and technology to make this process scalable and repeatable.

Implement

- Select tools and technologies to perform processes for data extraction, transformation, and loading (ETL).

- Identify gaps, such as exception processes, necessary for sensitive information or unusual file formats.

- Share access to the newly aggregated data with team members.

With data science tools, importing spreadsheets into a queryable database can be straightforward. The transformation restructures the data, from horizontal rows into vertical columns grouped by common variables. You can then use the new structure for data visualization and advanced analytics.
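To make the restructuring concrete, here is a small sketch that reads a spreadsheet export, reshapes it, and loads it into a database you can query. It assumes one reading of the paragraph above: a wide sheet with one column per year is turned into one record per site and year. The sample data, table name, and column names are invented for illustration, and SQLite stands in for whichever database you select.

```python
import csv
import io
import sqlite3

# Hypothetical wide-format export: one row per site, one column per year.
WIDE_CSV = """site,2019,2020,2021
north,10,12,15
south,7,9,11
"""


def load_long(conn, csv_text):
    """Reshape wide rows into (site, year, reading) records and load them."""
    conn.execute(
        "CREATE TABLE measurements (site TEXT, year TEXT, reading REAL)"
    )
    for row in csv.DictReader(io.StringIO(csv_text)):
        for column, value in row.items():
            # Skip the identifier column and any empty cells.
            if column == "site" or value == "":
                continue
            conn.execute(
                "INSERT INTO measurements VALUES (?, ?, ?)",
                (row["site"], column, float(value)),
            )
    conn.commit()


conn = sqlite3.connect(":memory:")
load_long(conn, WIDE_CSV)

# Once the data is in long form, grouping by a common variable is one query.
totals = conn.execute(
    "SELECT year, SUM(reading) FROM measurements GROUP BY year ORDER BY year"
).fetchall()
print(totals)  # [('2019', 17.0), ('2020', 21.0), ('2021', 26.0)]
```

The long form is what lets a single `GROUP BY` summarize every year without naming each column, which is also the shape most visualization and analytics tools expect.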

Key Insights

- Many companies have large quantities of data stored in older or obsolete formats. If curated and made accessible, this data can be used to address today’s business problems.

- Data science tools and best practices facilitate access to historical datasets and analyses that can enable your organization to generate new insights.

- Machine learning and AI solutions need historical data to be curated and prepared as inputs. Successful data migration and access to older data sources can help advance your machine learning initiatives.

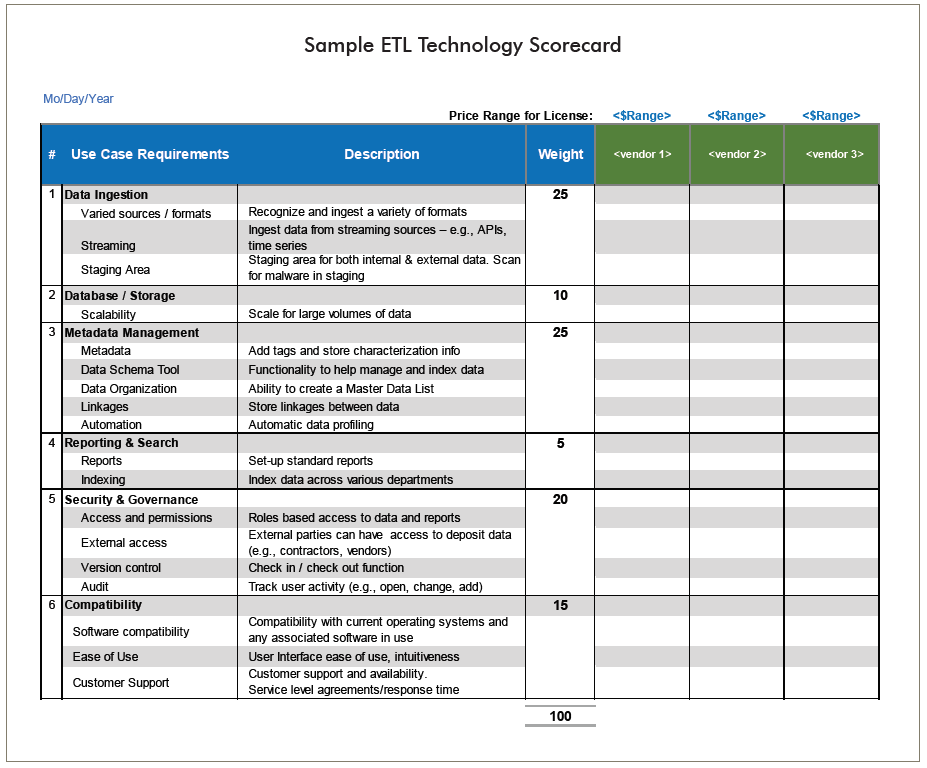

To help you evaluate ETL technology solutions, you can leverage our recommended list of business and technical requirements, and modify it for your organization. You can also use this sample scorecard to facilitate your efforts as you evaluate various ETL solutions.
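If you prefer to tally a scorecard in code, a weighted average is one common approach. The criteria, weights, tool names, and ratings below are hypothetical placeholders and are not taken from the recommended list or the sample scorecard; substitute your own requirements.

```python
def weighted_score(weights, ratings):
    """Return the weighted average rating across all criteria."""
    total_weight = sum(weights.values())
    return sum(weights[c] * ratings[c] for c in weights) / total_weight


# Hypothetical criteria and weights (a higher weight means more important).
WEIGHTS = {"handles_legacy_formats": 3, "repeatable_process": 2, "cost": 1}

# Hypothetical ratings on a 1-5 scale for two made-up candidate tools.
RATINGS = {
    "tool_a": {"handles_legacy_formats": 4, "repeatable_process": 3, "cost": 2},
    "tool_b": {"handles_legacy_formats": 2, "repeatable_process": 5, "cost": 5},
}

scores = {tool: weighted_score(WEIGHTS, r) for tool, r in RATINGS.items()}
best = max(scores, key=scores.get)
```

Keeping the weights separate from the ratings makes it easy to see how the ranking shifts when your organization's priorities change.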

Conclusion

By selecting a technology solution to extract, transform, and load data, you can develop a process to convert older, unused or forgotten data into assets that have ongoing value. When you implement a small proof of principle project, you help create a repeatable process that can be applied to other datasets. Over time, you can convert a substantial volume of legacy data to help address current and future business challenges.